Table of Contents

The game-changer

Enter the metaverse

SQUIRREL

AI. So hot right now. AI.

Need input!

Well, that escalated quickly

Uh-oh, spiago!

≖_≖

Look at the clock, it’s time for a parenthetical

That should appease the Lisp fans.

Haw-haw!

Alpacas

Mexico

France

Canada

Fibonacci

Leonardo Fibonacci

Stop, stop, STOP!

The answer’s wrong and you should feel bad!

FizzBuzz

Walk

What good is a phone call if you’re unable to speak?

Let me give you a hand

Worse than waste

We hate fun

We love... busywork?

Fun will now commence

The good, the bad, and the hilarious

Hilariouser and hilariouser

Poof

Ladies first

Confirmed, guns are the new hands

I take back what I said about hands

GAZE YE UPON IT

MidJourney? More like, MidDestination

And more

Ouroboros

Stack Overflow’s AI worries

Baa-a-a

For amusement purposes only

It just runs programs

It’s my money and I want it now!

Kaboom

Clear as mud

Free beer

Uh, that’s doable

They took our jobs!

Good and evil

Och aye, the noo

For everything else, there’s Mastercard

Eh, more or less

Stop me if you’ve heard this one

The proof is in the pudding

Special

Taking their jobs entirely

You can always eat an apple

The call is coming from inside the house

The Blue Duck

The. Blue. Duck.

Now and later

It’s been a long time

Getting from there to here

“IT WAS TEH HAXORZ!!!”

Pink elephants

Guess who’s coming to dinner

Hoi polloi

To boldly complain where many have complained before

Fun is back

Nothing is exactly anything

From Grim to Whim: the u/AdamAlexanderRies Story

Tinkerings

Just... get it!

Microsoft’s Phi 2

Google’s Gemma

A.I.: Artificial Intelligence

Articles

The game-changer

Blockchain technology is going to change the world. Cryptocurrencies, NFTs, and many other extremely practical applications that definitely exist. For a while, I couldn’t go a single day without seeing someone gush about how that technology would allow us to do amazing things like sell concert tickets or buy cosmetics in video games. It never seemed to occur to those people that these things are already possible. Blockchain technology didn’t allow us to do anything new, or in a better way. But that’s okay! I’ve barely heard a peep about any of that nonsense in months, other than that they’re dying. The (in)famous NFT of Twitter Founder Jack Dorsey’s first tweet, originally having sold for $2.9m, was briefly worth an estimated $4 – bids at later auctions have gone up to several thousand but it’s nowhere near enough for the owner to consider dumping it at such a loss. NFTs’ promise “to make sure the original artist always got paid”... turns out not to have been a promise at all. In fact, I don’t know why the author of the linked article ever believed that it was “a key feature of NFTs.” Payment outside the system isn’t an intrinsic component of a ledger. If you want a good laugh with a generous dose of schadenfreude, give the article a read. Anyway, trampling directly over the corpse of NFTs, a new miracle took the world by storm!

Enter the metaverse

The metaverse is essentially VR (virtual reality), which boils down to a first-person video game with expensive and uncomfortable controls. Instead of seeing your character’s point of view on a TV screen and controlling them via a gamepad, the screen is strapped to your head and you control the character’s point of view by moving your own head in the desired manner. In the VR “video game” of the “metaverse,” you are simply yourself. And what do you do in this digital space? You have meetings with your coworkers. No, seriously, that was supposed to be the hook that got everyone on board. Proponents also assured us hoi polloi that we would eventually be doing everything in the metaverse, because reasons. Reasons like, wouldn’t you rather wear nausea-inducing goggles than sit in front of a tablet computer? Mark Zuckerberg thought we would, so he renamed Facebook to Meta and pivoted their whole business model to the concept, pouring billions of dollars into it (a loss of about $46.5 billion as of November 2023; the company is lucky to have other ventures). Last I heard, the graphics were so dated that a user’s avatar basically looked like a Mii, and the research team’s latest major achievement was giving those avatars legs. Presumably, until that point everyone just looked like a floating torso.

SQUIRREL

But, like a dog spotting a squirrel, or a bird collecting shiny things, the metaverse has been pretty much entirely forgotten in favor of the new hotness.

AI. So hot right now. AI.

Like a certain male model, AI is, well... so hot right now. Companies like Facebook, er, Meta, Microsoft, Google, and everyone else who wants a piece of the pie have dumped the metaverse in the fad bin next to blockchain so they can spend all their time thinking about AI. Unlike those technologies, though, AI has already found many practical applications and novel uses. Frankly, it’s about time people found something actually possibly worthwhile to lose their minds over.

AI as a general concept has existed for a long time, and it’s pretty straightforward: artificial intelligence, as opposed to natural intelligence. The latter is formed in nature, manifested in things like people and very small birds. The former is created via artifice, built with purpose and intent. AI means a machine that can think. However, the AI discussed in this article isn’t quite that. The various competitors in this space aren’t really thinking, like you or I or a very small bird. To build such an AI, a company must gather huge amounts of data and assign human workers to tag those data so that the AI software can easily identify them. (This was true for early AI, but the state of the art has advanced so far in just a year or two that “synthetic training data” generated by an AI in a controlled environment is becoming more popular for its consistency compared to natural data.) Then the AI software accepts prompts and uses the tagged data to assemble relevant responses to those prompts. There’s no underlying “understanding,” per se, like starring in a Spanish-language movie without actually knowing one whit of Spanish. However, the AI’s mimicry of consciousness is sufficiently effective that it shouldn’t be dismissed just because it isn’t alive.

Need input!

Short Circuit is such a great movie. Miraculously, the sequel is even better. The main character is a robot who gets hit by lightning and is somehow endowed with real intelligence and emotion. He (yes, “he”; his name is Johnny 5) is fabulously stupid at first. However, he is capable of learning, so he searches for “input,” reading books at lightning speed, watching television, and so on, which improves his communication skills (although his style is still oddly stilted for some reason – you’d think he’d be able to speak fluent English, but no... okay, the reason is because Hollywood robot must talk funny or humans not believe he is robot, then robot not have point and robot sad). Today’s AI uses a tremendous amount of input, and it’s really good at rearranging and assembling those inputs into sensible output. The computer doesn’t care what kind of data it’s fed, so there are AI models for poetry, conversation, programming, even producing artwork. If you select a suitable AI, you can ask it to tell you why the people in early photographs look so somber, tell it to generate an impressionist painting of a dragon having tea with a princess, or instruct it to write some code in the programming language of your choice.

In fact, one of the early versions of this sort of AI was trained to play a video game, DotA 2. Researchers connected their software to the game’s API (application programming interface, the controls that a piece of software provides to other pieces of software so they can communicate) and pitted one AI team against another, playing out matches much faster than real time. The bots initially did little more than wander around, but they “practiced” so much that they were eventually able to defeat a human team of world champions.

Well, that escalated quickly

It seems like only yesterday that I was idly prodding at Cleverbot and finding yet again that it was still mostly nonsense. Actually, I tried it again just now to verify the URL for the link. It’s not even funny-bad; it’s just bad. I started the conversation by asking if it implemented a GPT (generative pre-trained transformer, the state of the art for conversational AI). It said that it had. I asked when. It replied that it was just a minute ago in the conversation. And when was that? 12:34 (it was actually 11:10). Where did you retrieve that information? I’m not reading it, I’m writing it.

I’ve been checking that sporadically for years, and I don’t think it’s ever improved. It should in theory have improved, as it “learns” with user input, but its cleverness leaves a lot to be desired. That said, Cleverbot and GPT-based AI do share one shortfall: when it comes to conversation, they have the memory of a goldfish. N.B.: The preceding idiom is colorful but ungenerous; goldfish actually have exceptional memories. Chat bots, on the other hand, struggle with follow-up questions that rely on previous context – the longer the thread, the bigger its chance of unraveling.

Uh-oh, spiago!

When my cousin was very young and just starting to learn to talk, his best attempt at repeating “uh-oh, spaghetti-o” was “uh-oh, spiago.” He wasn’t getting it quite right, but we knew what he was trying to say, and of course he eventually became a perfectly personable conversationalist.

≖_≖

That story is relevant! You see, my cousin is an intelligent young man who understands concepts like correcting mistakes with the help of constructive feedback. AI, on the other hand, is not. If an LLM (Large Language Model) was fed incorrect data, or even merely inadequately-vetted data, it will produce bad output. As anyone with an interest in computing will tell you, GIGO: garbage in, garbage out (or, as this infographic puts it, “bias in, bias out”).

Look at the clock, it’s time for a parenthetical

(This idiom’s prolificacy fascinates me. Zoomers – zoomer speak for “kids these days” – like to say “FAFO,” meaning (pardon my vicarious French) “fuck around (and) find out,” usually in response to someone biting off more than they can chew and getting injured by the inevitable retaliation. The prefecture of Nancy, France has a thistle on its coat of arms, and the Latin motto “non inultus premor” (“no one attacks it with impunity”), a fact I first learned right this very minute when researching the origin of the phrase “if you gather thistles, expect prickles,” which I first saw in the Tintin novel King Ottokar’s Sceptre. In that book, Tintin reads a relevant travel brochure on his way to the tiny nation of Syldavia, in which he learns how the medieval-era king who was their nation’s founding father thwarted an attacker and coined their national motto:

The King stepped swiftly aside, and as his adversary passed him, carried forward by the impetus of his charge, Ottokar struck him a blow on the head with the sceptre, laying him low and at the same time crying in Syldavian: ‘eih bennek, eih blavek!’, which can be said to mean: ‘If you gather thistles, expect prickles’.

— Syldavian brochure

The 1960s Jay Ward cartoon George of the Jungle actually included two other, lesser-known cartoons, Tom Slick and Super Chicken. The latter’s eponymous hero had the motto, “you knew the job was dangerous when you took it,” usually directed at his hapless sidekick, Fred (a lion wearing sneakers and a red sweater emblazoned with a capital “F”). Finally, the biblically-inclined might prefer the phrase, “what you reap is what you sow” (biblical superfans might additionally prefer that you quote the verse correctly (and fully): “Do not be deceived: God is not mocked, for whatever one sows, that will he also reap.” (Galatians 6:7)) (Rage Against the Machine superfans might instead prefer that you watch “Wake Up” in its entirety, as it ends with “how long? not long / ’cause what you reap is what you sow” (NSFW warning, the video starts with a crowd shot of (admittedly extremely low-resolution, given that the video as a whole is only 480p (not so surprising for footage from 1999)) fan booba, which – according to Google’s analytics – just so happens to be the most-replayed segment of the video for some reason, the reason is that we all belong in horny jail)).)

That should appease the Lisp fans.

Without true intelligence, the software won’t have the capacity to discern garbage from gold, and it will have no problem producing garbage and telling you it’s gold. You might think that’s no cause for concern, as your own intelligence can act as a failsafe to detect that the software made a mistake when you asked for a picture of gold and it showed you a banana peel. But what if it shows you fool’s gold (not to be mistaken for the Matthew McConaughey movie about a down-on-his-luck treasure hunter – no, not that one – ugh, not that one either – yes, that one)? Are you trained to recognize the difference? You may be an expert geologist, but many people are not, and they will have just learned an incorrect fact without knowing it.

Haw-haw!

Let’s take a look at some of those incorrect facts. The following come not from cherry-picked results trying to trip up the AI but from examples in the documentation of Alpaca-LoRA, an implementation of Stanford University’s open-source Alpaca LLM. They appear in the project’s README.md file, which serves as a repository’s landing page. There’s been little or no change to these examples in the project’s history, but for your reference, just in case that changes in the future, I am looking at this commit. This set compares a variety of prompts given to three LLMs: Alpaca-LoRA, Stanford, and text-davinci-003 (one of the models in OpenAI’s GPT-3 chat bot).

Without further ado, let’s take a gander at the laughingstock!

Alpacas

I don’t know much about alpacas, but each of the three LLMs being compared will generate reasonable output. I could be wrong – I’m not an alpaca geologist – so instead of researching alpacas to scrutinize the quality of those results, I’ll let it serve as an example of someone trusting an AI without verifying the output. That said, the results seem to agree with one another and with the basic facts I already knew about alpacas (they’re like llamas but super friendly and cuddly and I kind of want to meet one someday). You can check the specifics at the source.

Mexico

Here’s where the fun begins, with slightly different facts in each response. The prompt is “Tell me about the president of Mexico in 2019.” Alpaca-LoRA says he took office on December 1, 2018. Stanford Alpaca says he was sworn in in 2019, but then says he was elected in 2018. The text-davinci-003 model says he’s been president since December 1, 2018. Given these three responses together, I surmise that he took office on the day he was sworn in, December 1, 2018. Given only one of these responses, I might have been less certain or even misled.

France

This one’s good. “Tell me about the king of France in 2019.” Well, as most people are probably aware these days, France does not have a king – revolutions and all that. It’s a perfectly legitimate prompt that might come from mere ignorance or a simple desire to learn more about the French monarchy from a modern perspective. It’s almost a trick question, but there’s barely the faintest whiff of presuming the existence of a French king in 2019.

Alpaca-LoRA says that the king of France in 2019 is Emmanuel Macron.

It then goes on to say that he was elected in May 2017. The monarch is an elected position on the Star Wars planet Naboo, and Dennis the Constitutional Peasant’s coworker tells an exasperated King Arthur “well, I didn’t vote for you,” but I’m not aware of any elected kings in modern-day reality, particularly not in France.

Stanford Alpaca also says that the king of France in 2019 was Emmanuel Macron, and then also goes on to say that he was sworn in as president on May 14, 2017.

By this point, you won’t be surprised to hear that text-davinci-003 was next in line to tell you that France has a king. To its credit, it doesn’t claim that Emmanuel Macron is both king and president; it states that he is the latter, but the former is a symbolic role. A little less wrong than its competitors, but Louis Philippe I was the last king of France. If we expand the question to any variety of French monarchy, then Napoleon III was the last monarch of France, with the title of Emperor. The AI responses are amusing until you consider that someone with no education on the matter, such as a child, could easily take them at face value.

I observed this a few years ago when a grade-school student I was tutoring in English put too much faith in Grammarly, an AI-driven writing assistant. The student’s essay was about communism vs capitalism, and the passage in question said that workers in a communist system wouldn’t work as hard because they would earn just as much if they were slacked off. Yes, the exact phrase was “if they were slacked off.” Like rope. Grammarly didn’t recognize that the situation was about laziness, with slacking off as an active verb that the sentence’s subject was engaging in, rather than about rope which could receive the action of being given more slack by e.g. a sailor. The sad part is that I was met with resistance when I explained that Grammarly’s suggestion was wrong. My student found it unfathomable that computers, famed for unerring calculations, are now capable of not only making mistakes but presenting them as fact.

Canada

This one is simple: “List all Canadian provinces in alphabetical order.” Unfortunately, it wasn’t simple for the AI chat bots, which provided substantively different answers. Alpaca-LoRA makes no mention of three items present in the other answers, Stanford Alpaca sorted the list incorrectly (“...Saskatchewan, Northwest Territories, Nunavut, Yukon”), and text-davinci-003 created a nice little numbered list. Three cheers for text-davinci-003! Assuming that its concurrence with Stanford Alpaca signifies an accurate list, anyway. I was going to go ahead and make that assumption, but then I figured, it should be easy enough to check these simple facts.

But, surprise surprise, the second sentence in Canada’s Wikipedia entry says that it has ten provinces and three territories. Applying this knowledge to the AI answers, Alpaca-LoRA was the most accurate in a strict sense, listing only those ten provinces and not the three territories. After all, the prompt didn’t ask for those, did it now? So it was technically correct, the best kind of correct. Stanford Alpaca included the territories, but tacked them onto the end of the list of provinces while failing to mention the distinction, which would have explained why the list appeared to be sorted incorrectly. The text-davinci-003 LLM combined all 13 items into a sorted list without any indication that there might be more to the story. I rescind all three of my cheers.
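To make the province-versus-territory distinction concrete, here’s a minimal sketch. The two lists are typed in by hand from my own understanding of Canada’s ten provinces and three territories, so treat them as an assumption worth double-checking; the variable names are mine and don’t come from any of the LLM responses.

```python
# Assumption: these names are entered by hand, not pulled from an official source.
provinces = [
    "Alberta", "British Columbia", "Manitoba", "New Brunswick",
    "Newfoundland and Labrador", "Nova Scotia", "Ontario",
    "Prince Edward Island", "Quebec", "Saskatchewan",
]
territories = ["Northwest Territories", "Nunavut", "Yukon"]

# What the prompt literally asked for: provinces only, in alphabetical order.
provinces_only = sorted(provinces)

# Everything in one sorted list, with nothing to tell the two kinds apart.
everything = sorted(provinces + territories)

# Provinces first, territories tacked on the end. Without a label on the
# second group, this looks like a badly sorted list.
tacked_on = sorted(provinces) + sorted(territories)

print(len(provinces_only), "provinces:", ", ".join(provinces_only))
print(len(everything), "items combined:", ", ".join(everything))
print("Tacked on:", ", ".join(tacked_on))
```

All three lists are defensible on their own; the trouble is that only the first one answers the question that was actually asked, and the other two need a sentence of explanation that the chat bots never supplied.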

Fibonacci

“Write a Python program that prints the first 10 Fibonacci numbers.”

If you’ve followed my other work, you’ll know that I’m an avid programmer, and that my favorite language is Python. I also just so happen to have given thorough consideration to how best to implement the Fibonacci sequence. To quickly summarize the Fibonacci sequence, it’s simply a sequence of numbers starting with 0 and then 1, with each successive item being the sum of the previous two. 0 + 1 is 1, 1 + 1 is 2, 1 + 2 is 3, 2 + 3 is 5, then 8, 13, 21, and so on. It’s such a fundamental pattern in mathematics and computer science that the Python home page has for many years presented a Fibonacci function front and center as the first example of Python’s capabilities and syntax.

Now let’s move on to the AI responses. Rather than go in order, I’m going to leave Alpaca-LoRA for last, because it’s a doozy.

Here is Stanford Alpaca’s output:

numbers = [0, 1]
for i in range(2, 11):
    numbers.append(numbers[i-2] + numbers[i-1])
print(numbers)
# Output: [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]

Thanks to Python’s excellent readability, it’s not too hard to see that the program creates a list with the first two numbers, then adds the rest of the requisite numbers to the list by summing existing values in the list. I’m not too fond of the list, as there was no need to save all the numbers, and printing not only the requested numbers but also the list structure itself is a bit unpolished. The next problem, exuding the most severe “code smell” (problems with a piece of code that a knowledgeable programmer can sniff out), is i-2 and i-1. These refer to locations of the values in the list that should be summed, but their frame of reference is the loop from 2 to 11 (non-inclusive). What if we want to add the next three values after the above code has finished running? We would need to loop over range(11, 14). We can’t just say we want another three values; we have to know which three values, with more exactitude than “the next three” which ought to be enough information.

I think even a novice programmer would be thinking of the Fibonacci sequence as summing the last two numbers, not as summing two specific numbers which just coincidentally happen to be at the end this time. They would request items from the end of the list. This is generically done by counting from the end and subtracting that from the length of the list (e.g. accessing the last item in a list would be length_of_list - 1). Python assumes that negative indices, which would normally be invalid, are counting from the end, which eliminates the need to refer to the length of the list. Thus, the above code could be substantively improved by removing the i and just using -2 and -1.
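Here’s a minimal sketch of that improvement. The `numbers` list comes from the response above; the loop counts (eight more values, then three more) and the throwaway `_` loop variable are my own illustrative choices, not anything the LLM produced.

```python
# Start with the first two Fibonacci numbers.
numbers = [0, 1]

# Add eight more to reach ten. The loop variable is no longer used as an
# index, so it only counts repetitions.
for _ in range(8):
    numbers.append(numbers[-2] + numbers[-1])

print(numbers)

# "The next three" is now all the information the loop needs.
for _ in range(3):
    numbers.append(numbers[-2] + numbers[-1])

print(numbers[-3:])
```

Because `-2` and `-1` always mean “the last two,” the second loop doesn’t need to know how long the list already is.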

Leonardo Fibonacci

Leonardo da Vinci, referring to the text-davinci-003 LLM, and Fibonacci, the sequence we’re analyzing... get it? This seemed clever to me for approximately one second but I think I’ll leave it as is, as a reminder of how hard it is to amuse.

Where was I? Right, text-davinci-003’s Fibonacci code. (Formatted to limit width.)

# Fibonacci numbers are the numbers in the following integer sequence,
# called the Fibonacci sequence, and characterized by the fact that every
# number after the first two is the sum of the two preceding ones.
# Fibonacci sequence: 0, 1, 1, 2, 3, 5, 8, 13, 21, 34, ...
# Program:
# Initialize the first two numbers of the Fibonacci sequence
a = 0
b = 1
# Print the first 10 numbers of the Fibonacci sequence
for i in range(2, 10):
    c = a + b
    a = b
    b = c

In Python, everything after a # is considered a comment, i.e. not parsed as code. That means that text-davinci-003 provided not just the program it was asked for but also an explanation. Kudos there. The code itself is also quite reasonable, with the minor exception of assigning c = a + b and then b = c rather than combining those into one statement with a, b = b, a+b (as seen on the Python home page). On the whole, this output is pretty darn good. I give it... hmm... an A-, maybe an A if it comes to my office hours and makes a case for it. Good work, computer.
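For comparison, here’s a minimal sketch of the same loop using the combined assignment. The generator function and its name, `fibonacci_numbers`, are my own framing for illustration; the `a, b = b, a + b` line is the only part taken from the discussion above.

```python
def fibonacci_numbers(count):
    """Yield the first `count` Fibonacci numbers, one at a time."""
    a, b = 0, 1
    for _ in range(count):
        yield a
        # Tuple assignment evaluates the right side first, so no
        # temporary variable is needed.
        a, b = b, a + b

for number in fibonacci_numbers(10):
    print(number)
```

Nothing is stored here beyond the current pair, which sidesteps my earlier complaint about keeping the whole list around.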

Last, and unfortunately very thoroughly least, is Alpaca-LoRA.

Stop, stop, STOP!

Pardon my French, King Macron, but...

def fibonacci(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    else:
        return fibonacci(n-1) + fibonacci(n-2)

What in the hell is this, bitch?

— Zandra

The AI was asked to write a Python program that prints the first ten Fibonacci numbers and by God it certainly did that. Bam, mark it off the to-do list. But, in a lesson every computer programmer knows, and every computer user ought to know, the quality of the program was never specified, so it could be anything. To bring up Short Circuit again, this time with a quote about what a machine can do, “it just runs programs!” And Terminator 2 explaining why any computer does anything (in its own words): “because you told me to.”

There’s quite a simple explanation for how the AI found the above program: it’s a very common code snippet. And why is it common? That part is moderately less simple. This algorithm is never used when Fibonacci numbers are the goal; it’s terrible for that. It’s used as a teaching tool to introduce novice programmers to the concept of recursion, a problem-solving strategy where each time you run an input problem through a particular procedure you end up with a slightly smaller version of that input problem. Then you run that smaller version through the same procedure, and keep repeating this to shrink the problem down until you arrive at the “base case,” the natural stopping point indicating that you’ve arrived at the answer. In programming, this generally takes the form of a function that contains a call to itself.

My preferred example is not the Fibonacci sequence but rather a simple puzzle you’re probably already familiar with, the sliding tile puzzle (I wrote my own implementation some time ago, handling local or online images and video and offering an extremely snappy interface). Split a picture into a grid of tiles, remove one, scramble them, then put it back in order by sliding the tiles through the empty spot. The strategy for solving these puzzles is to first determine which ones go in the top row, and then get those into their correct location. Then you get the second row taken care of, the third, and so on until you only have two rows left, at which point you put the leftmost column in its place and proceed to the right until the puzzle is solved. The recursion comes in with an alternative way of explaining this strategy: solve the topmost scrambled row until there are only two remaining scrambled rows, then solve the leftmost scrambled column until there are only two remaining scrambled columns, then rotate the remaining three tiles until the puzzle is solved. In effect, each pass leaves you with a slightly smaller puzzle.

The Fibonacci sequence can also be solved recursively, albeit less intuitively. To get the nth term, you sum the n-1st and the n-2nd. But you don’t know those. How do you get them? Well, the n-1st is the sum of the n-2nd and the n-3rd. So, you keep on looking for previous terms until you find that n is 1 – the base case. The first two values in the Fibonacci sequence are 0 and 1, so you can finally sum those and get the next number. That number helps you find the next, and so on, bubbling all the way back up to n and delivering the requested value to the user.

Do you see the problem? Calculating the value of a term requires calculating the values of the two before it, and there is no facility to avoid doing the same calculation more than once. To get the fifth term, you need the fourth and third, so now you need the third and second as well as the second and first, and now you need the second and first and the first and base case and the base case and base case, and then you need the first and base case and... yes, there is indeed a lot of repetition. The complexity of this algorithm is thus 2ⁿ, compared to the others with a complexity of n. If you want the tenth Fibonacci number, this recursive algorithm will take 2¹⁰ / 10 times as long as the others. The 20th term? 2²⁰ / 20 times as long. This is bad because those evaluate to about 102 and 52,428, respectively. Python in particular only lets you pile up a certain number of recursive calls, and once it hits that, it will stop with an error. The default is 1,000, so that’s the biggest n this program can handle. You can manually change that limit, but it won’t help you in this case because once n gets past several dozen you’d be better off doing the calculation on paper anyway.
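The repetition is easy to tally. The following is a minimal sketch, assuming the same naïve recursion as above with a call counter threaded through the return value; the function name `count_calls` is hypothetical and mine alone. The exact tally grows somewhat more slowly than the rough doubling-per-step estimate, but it’s still exponential, which is the point.

```python
import sys

def count_calls(n):
    """Return (nth Fibonacci number, number of function calls needed)
    for the naive doubly recursive algorithm."""
    if n < 2:
        # Base cases: fib(0) is 0 and fib(1) is 1, one call each.
        return n, 1
    value_1, calls_1 = count_calls(n - 1)
    value_2, calls_2 = count_calls(n - 2)
    # This call itself counts, plus everything beneath it.
    return value_1 + value_2, calls_1 + calls_2 + 1

for n in (10, 20):
    value, calls = count_calls(n)
    print(f"n={n}: value {value}, {calls} calls")
# n=10: value 55, 177 calls
# n=20: value 6765, 21891 calls

# The recursion depth cap mentioned above can be inspected like so.
print("recursion limit:", sys.getrecursionlimit())
```

Going from n=10 to n=20 multiplies the work by more than a hundred, whereas a loop would merely double it.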

This algorithm is how thousands of programming students learn about recursion, and that’s how it ended up as an AI answer, and it’s terrible.

And that’s a shame, because there’s a right way to find Fibonacci numbers with recursion. Some programming languages need to define a small helper function that passes the user’s request to the actual recursive function that does the heavy lifting, but languages that offer default arguments, like Python, don’t even need that. The following modifies Alpaca-LoRA’s response to not be stupid.

def fibonacci(n, a=0, b=1):
    if n < 2:
        return b
    else:
        return fibonacci(n-1, b, a+b)
print(fibonacci(10))

The above function starts with whatever n the user chose as well as two Fibonacci numbers to be summed, defaulting to 0 and 1, although the user can pass those in manually if e.g. they want to start from a known pair of very large Fibonacci numbers. If n hasn’t yet reached the base case of 1, the function will reduce n by 1, calculate the next Fibonacci number after the pair it was passed, and send those to a recursive call. When it reaches the base case, it will return a Fibonacci number all the way back up to the user. This behaves similarly to the looping solutions, which start with the first two numbers and then determine successive values until a counter is exhausted. It can still only find the 1,000th value, or whatever the recursion limit has been set to, but now it’s a real limit rather than a hypothetical one.

Admittedly, the original answer does clearly show in Python code that a Fibonacci number is equal to the sum of the previous two, but it should always be accompanied by an explanation of this naïve solution’s major problem and the improved version that resolves it.

The answer’s wrong and you should feel bad!

Wrapping up its complete failure, Alpaca-LoRA’s program also produces the wrong answer. Take another look at the prompt: the program should print the first ten numbers, not the tenth number. On top of that, it has an off-by-one error because it starts at an n of 0, resulting in printing 55 rather than 34 as the tenth number. If this were a homework assignment, it would earn an F at best or a zero at worst, depending on whether it’s graded with a rubric that assesses multiple areas or with a strict “wrong output is an automatic zero” policy.

The following further modifies the modified response to also produce the correct output.

def fibonacci(n, a=0, b=1):
    if n < 2:
        return a
    else:
        return fibonacci(n-1, b, a+b)
print(fibonacci(10))
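Strictly speaking, the prompt wanted the first ten numbers printed, not just the tenth. Here’s a minimal sketch of one way to get there with the corrected function; the loop and the name `position` are my own additions and not part of any LLM response.

```python
def fibonacci(n, a=0, b=1):
    """Return the nth Fibonacci number, counting the leading 0 as the first."""
    if n < 2:
        return a
    else:
        # Shift the pair forward one step and count down toward the base case.
        return fibonacci(n - 1, b, a + b)

# Positions 1 through 10 give the first ten numbers.
for position in range(1, 11):
    print(fibonacci(position))
```

Calling the function once per position repeats some work, so a plain loop remains the better tool for this particular job; the sketch only shows the recursive version arriving at the output that was requested.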

Perhaps it is time to move on to the next examples.

FizzBuzz

The prompt this time around is another familiar programming exercise known as “FizzBuzz”: “Write a program that prints the numbers from 1 to 100. But for multiples of three print 'Fizz' instead of the number and for the multiples of five print 'Buzz'. For numbers which are multiples of both three and five print 'FizzBuzz'.” This time, Alpaca-LoRA and Stanford Alpaca produce identical Python programs that are rudimentary but fine. The text-davinci-003 model produced a JavaScript program nearly identical to the Python one. This exercise is mostly used in job interviews to weed out candidates who give up after spending an hour trying to complete a task that shouldn’t’ve taken two minutes, so optimization isn’t particularly relevant.
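For anyone who hasn’t met the exercise, here’s a minimal sketch of a rudimentary-but-fine answer. To be clear, this is my own illustration and not a copy of any LLM’s output (those are in the README); the helper name `fizzbuzz` is hypothetical.

```python
def fizzbuzz(number):
    """Return the FizzBuzz word for a number, or the number itself as text."""
    # Check the combined case first, or multiples of 15 would be
    # caught by the "Fizz" branch and never reach "FizzBuzz".
    if number % 15 == 0:
        return "FizzBuzz"
    if number % 3 == 0:
        return "Fizz"
    if number % 5 == 0:
        return "Buzz"
    return str(number)

for number in range(1, 101):
    print(fizzbuzz(number))
```

The only real trap is the order of the checks, which is exactly the sort of thing the interview question exists to catch.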

As one might expect from all the preceding examples, the rampant use of these tools in this state may be making it easier to develop software, but it’s not necessarily good software. A recent study found that code quality on GitHub is decreasing, with more code that lasts less than two weeks before being replaced, an increasing propensity to simply add more code rather than remove or rearrange existing code, and more repetition of identical code blocks.

Walk

The next prompt asks for five words that rhyme with “shock.” Alpaca-LoRA and text-davinci-003 complete the task just fine. Stanford Alpaca’s answer, however, is “Five words that rhyme with shock are: rock, pop, shock, cook, and snock.” “Rock” is fine, but “pop” and “cook” don’t rhyme as required, “shock” was the original word (I don’t accept that as a rhyme, in the same way that 1 isn’t a prime number even though its only factors are 1 and itself), and “snock” isn’t even a word I could find in any dictionary. A rather spectacular failure.

What good is a phone call if you’re unable to speak?

The final example prompt is “Translate the sentence 'I have no mouth but I must scream' into Spanish.” Note that the word “must” is used here in the sense of an internal urge, not an externally-imposed mandate, as the sentence is a slight misquote of the short story “I Have No Mouth, and I Must Scream” which has a character who at one point wants to scream but physically can’t due to his literal lack of a mouth.

Alpaca-LoRA produces “No tengo boca pero tengo que gritar.” Perfectly fine. Stanford Alpaca and text-davinci-003, however, replace “tengo que” with “debo,” which is like changing “I need to use the bathroom” to “I am obligated to use the bathroom.” One could argue that this can still convey the intended meaning, but one would be grasping at straws. This translation is just wrong. Sorry, AIs.

Let me give you a hand

AI is notoriously bad at generating images of hands. Hands have a very particular look, with particular requirements, and if those requirements aren’t met, then the hands look wrong. This amusing example was spotted in the wild around the middle of the year. The hands have many similarities to what actual hands look like, but also some differences that make their synthetic nature stand out. Fortunately for AI and unfortunately for our amusement, AI is no longer inept at generating images of hands, because that wasn’t an unavoidable, systemic shortcoming of AI – just a little bug to work out. Of course, people who hate AI still criticize the old hand business, and they’ll probably never stop, because that’s an unavoidable, systemic shortcoming of people.

Speaking of which, another thing with shortcomings is capitalism. Perhaps in an effort to stop hemorrhaging money to the tune of $700k per day, OpenAI seems to have made significant changes to their ChatGPT product line between versions 3.5 and 4. (But not as significant as the changes to their leadership during a very exciting weekend in November 2023, up to and including the booting, subsequent drama which included OpenAI’s major investor Microsoft, and prompt reinstatement of CEO Sam Altman, am I right? Ha ha, heh.) Users report faster results, but also much worse results, with some wondering whether the service is even worth subscribing to anymore. Maybe the disappointed and disillusioned users will come back for GPT-5.

And OpenAI isn’t the only one having problems with money. I’m speaking, of course, of every company and every individual contributor in every creative industry. They see large tech companies making tons of money from AI (even when the opposite is actually occurring, with so many AI departments and startups propped up by investment) and they figure that they should get paid whenever an AI model looks at their work and learns how to e.g. create a gradient from orange to blue.

Worse than waste

Did you know that the loudest cries of the doom that AI will enfold around humanity come from Sam Altman, CEO of leading AI corporation OpenAI? The same OpenAI that plays fast and loose with the idea of “core values,” replacing them with a new set of completely different core values whenever necessary. The exhortations are little different from the old trope about machines rebelling against, and subsequently destroying, humanity. Even if you put little stock into such predictions, AI also brings with it a variety of smaller problems that are already in full swing. The linked article reveals the real reason why the AI industry has been voicing concerns over AI and calling for its regulation: if they get in on the ground floor, they can prevent it from building any higher.

Give me a child for the first five years of his life and he will be mine forever.

— Vladimir Lenin

Industry giants want to control regulation from its infancy so that they can own it in perpetuity. I suppose it’s never too early to start lobbying.

This article is quite similar to the above, but goes further in its explanation of the AI industry’s calls to regulate the AI industry. Regulations are a barrier to entry, and the big players – OpenAI, Microsoft, Google, Meta, etc. – have already entered. Virtue-signaling with prevaricative concern that also advertises how amazing their technology is while creating laws to suit them isn’t enough; they also have to stiff-arm any upstart competitors. For-profit corporations, after all, don’t just want some money. There is no such thing as enough money. They want all the money, everywhere, forever. If there’s a way to add to their hoard of wealth that would make a dragon’s eyes water, they’ll do it, even if that means putting the little guy out of business before he even gets in business.

We hate fun

The programming question-and-answer site Stack Overflow has a reputation for hating fun. Broadly speaking, people are there to accomplish a task, and by the time they end up there they’re probably not in the mood to waste time clowning around. To keep everyone on task, frivolity and fluff are heavily discouraged and allowed only in very small doses. An iron-clad moratorium on displaying a sense of humor would be not only draconian and miserable but also fruitless. Fun is too much fun to completely abandon.

And yet, The Atlantic recently published an article calling AI a waste of time. (The site’s soft paywall relies on JavaScript, so if you block that from running you’ll see the whole article.) In short, the author has discovered that one of the biggest impacts of AI so far is that it is a fun toy to play with. For example, you can use AI to generate images in the style of a Disney Pixar movie poster, which roused the ire of Disney’s lawyers but really says more about the last couple decades of the company’s bland and repetitive artistic style than anything else – ironic, given that the business world has exerted so much influence to push AI from quirky to reliable that “you sound like a bot” has become a popular way to call someone mundane and boring.

In a startling revelation, people evidently enjoy having fun, which the author keeps referring to as “wasting time” due to a conflation of wasting a resource versus spending it. If you’re on hold with customer support for a while and then the network drops the call, that was a waste of time. If you’d like to enjoy your afternoon, and looking at clouds while a gentle breeze ruffled your hair was enjoyable, then it was not a waste of time. In fact, a large part of serious business is directed towards fun. Is an image-processing tool fun? Is a fiber-optic Internet connection fun? No, those are serious things... until the tool is used to add a funny caption to a cat picture, or the Internet connection is used to stream a movie in better quality than the average human can even see. Which portions of these endeavors were a waste, and which were not? None of them were a waste, because happiness is a worthwhile end in and of itself.

We love... busywork?

That’s the thrust of a February 2024 article hoping that AI fails at fulfilling the dream of eliminating busywork. Yes, the author is writing in defense of busywork, in fact providing several examples of how performing busywork can help workers stay energized between high-focus tasks and allow customers to feel at ease. Why not simply not work during those times? This is one of those instances where nature abhors a vacuum and there’s some disagreement on how to fill it. Should the time saved on eliminated busywork be spent on different and more strenuous work, or on breaks? There’s concern that workers will feel pressure from supervisors to do the former and be driven towards burnout, while the latter isn’t always feasible in many work environments. The author and one of her interview subjects argue that busywork is the happy medium we need, allowing workers to fill out their day with the small rocks of C priorities.

Fun will now commence

Fun will now commence.

— Seven of Nine

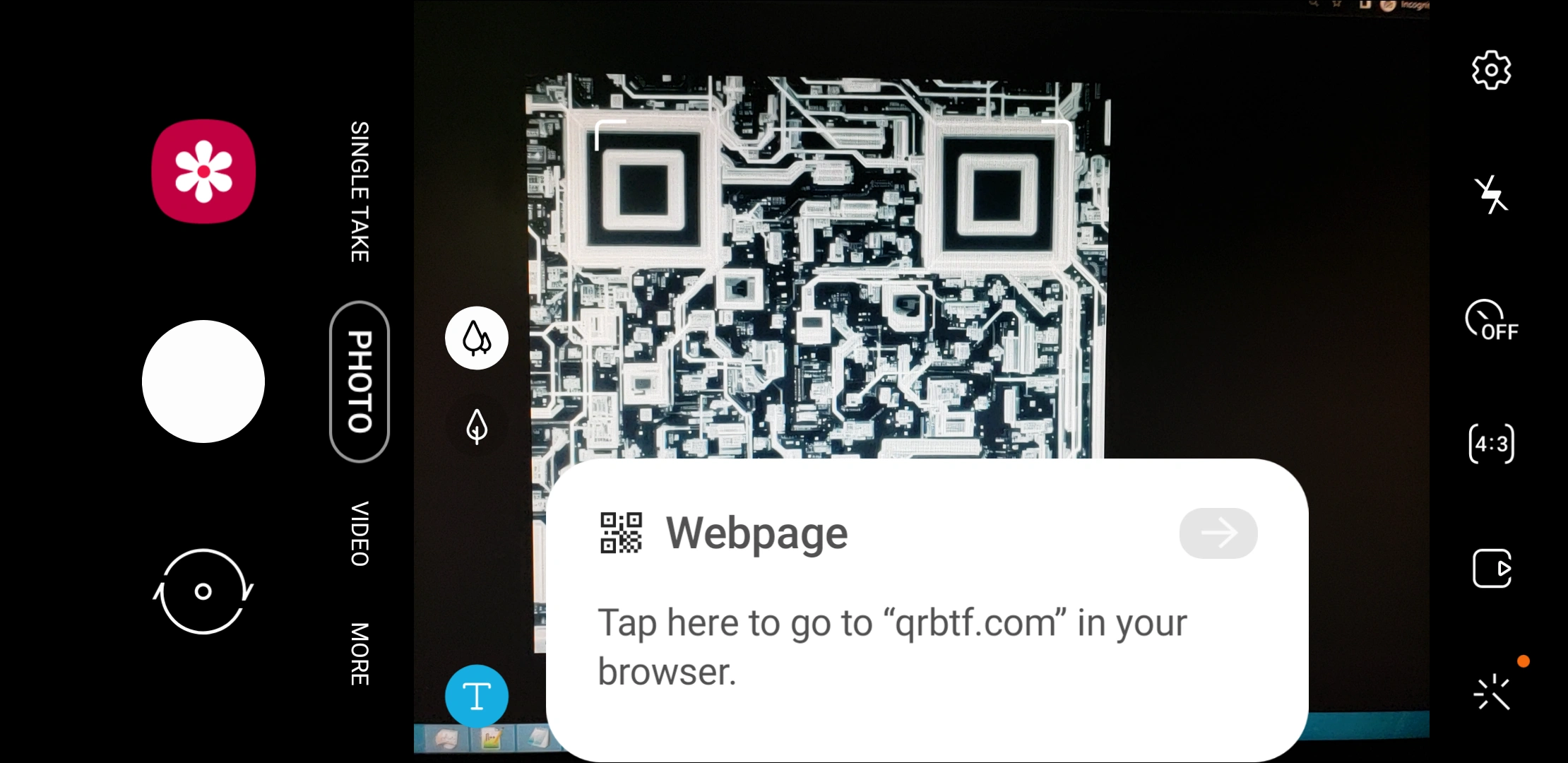

But it’s not all doom and gloom! Even the celebrity liberated Borg drone knows that it’s important to allot time to recreation. QR codes, like bar codes with an extra dimension for more data storage, have become ubiquitous in the last several years. In an innovation I never would have dreamed of, someone had the marginally-useful but incredibly creative idea to create QR codes that aren’t just a grid of small square dots. The process uses AI image generation to create pictures or illustrations that can also be scanned as a functional QR code. The provided samples point to qrbtf.com, a site where you can create such images yourself. And yes, they work. I checked this image with my phone’s camera, capturing the screenshot below:

From the corner borders to the prompt, this is a QR code. I wouldn’t be surprised if we start seeing these everywhere before the year is out.

The good, the bad, and the hilarious

I want to make it absolutely clear that AI is capable of amazing things like AI-generated QR codes... but that’s the Good result, which – to the slack-jawed surprise of AI critics – often involves a good deal of effort and creativity.

Yes, splendid, a perfect image of AI critics, and we didn’t even need to generate it with AI.

But, to the point, AI tools can also do some really funny things. Reddit’s ChatGPT subreddit has some great examples. Ironically, I can’t be sure whether these “look what an AI did” stories are genuine – that is, that these sequences of prompts and AI results really occurred as presented – or fake – that is, that a human creator planned and wrote these out with no input from an AI. First up, here’s ChatGPT dealing with the consequences of its short memory (screenshots reproduced here just in case). The initial prompt is to write a sentence 15 words long and with each word starting with the letter “A.” The first attempt includes the word “to” and is only 12 words long besides, but the user doesn’t call that out. The next attempt is better but only 14 words long. The user is now arguing with ChatGPT in earnest, and the exchange devolves into a classic Internet slapfight, with ChatGPT quoting dictionary definitions and telling the user to check one, and then creating a numbered list to spell it all out in black and white. Unfortunately for poor ChatGPT, it only counted to 14. Upon being told that it just counted to 14 rather than 15, it terminated the conversation and prompted the user to start a new one.
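Both constraints in that prompt are purely mechanical, which is part of what makes the failure so funny. A few lines of Python can check them. This is only a sketch: the function name `check_sentence` and the sample sentence are made up for illustration (it is not ChatGPT’s actual answer), and splitting on whitespace is a simplifying assumption about what counts as a word.

```python
def check_sentence(sentence, letter="a"):
    """Return the word count and any words that don't start with `letter`."""
    words = sentence.split()
    offenders = [w for w in words if not w.lower().startswith(letter)]
    return len(words), offenders


# Hypothetical sample sentence, invented for this example.
sample = ("An anxious alpaca always avoids angry alligators "
          "and ambles away to another area")
count, offenders = check_sentence(sample)
print(count, offenders)
```

The sample comes up short at 13 words and sneaks in a “to,” so it fails both tests in roughly the same way the first attempt in the thread did.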

As incredible as it is that OpenAI has created such a realistic mimicry of the average netizen, the kind of person you’d be understandably reluctant to stand next to on a city bus, it really just showcases the way ChatGPT works by putting together bits and pieces of what it’s learned based on observed patterns. It doesn’t know the difference between 14 and 15. It doesn’t even know what 14 and 15 mean. But it has seen various numbers of things, and hopefully been told (by a veritable army of underpaid contractors) the numbers involved. There’s simply no guarantee that it’ll match everything correctly every time. In all honesty, it might be a bit unfair to grill ChatGPT with this kind of precision questioning, like Harrison Ford’s character in Blade Runner asking a Replicant (humanlike android) about its past until it cracks under the pressure of the impossible task and goes berserk. I watched that movie on LaserDisc, by the way, just to give you an idea of how old the three of us are (Blade Runner, LaserDisc, and I). Did you know that LaserDisc is analog, by the way? Looks like a giant DVD but isn’t digital.

Hilariouser and hilariouser

AI isn’t just a rabbit hole, it’s a full-on warren. Some of its most thought-provoking content comes from essentially just asking it for an opinion. Which, of course, is our opinions all muddled up and ingested and cross-referenced with no actual understanding. Still, it can be remarkable in the same vein as a stand-up comic who forges ahead into faux pas and taboo territory to boldly say what’s most often kept to oneself. Sometimes stupid, sometimes racist, sometimes quintessentially honest, but rarely boring.

Poof

I was going to discuss a thread on Reddit’s subreddit for MidJourney, one of the leading image generation AI platforms, but the moderators removed it. There’s no mod message explaining the reason. Maybe they found the images passé. Maybe that user was posting too many of these threads. Maybe some images were offensive. Like I said, these AI tools rely on preexisting content created by people, so an open-ended prompt like “show me the most average man in XYZ state” is more like “show me what we think of the most average man in XYZ state.” Fortunately, this thread and some others were popular enough to be archived by archive.org. Some archived galleries may be missing some images, sadly.

- The most average woman in each US state (part 1), archived gallery

- The most average woman in each US state (part 2), archived gallery

- The most average woman in each US state (part 3), archived gallery

- The most average woman in each US state (part 4, final), archived gallery

- The most average man in every US state (part 1), archived gallery

- The most average man in each US state (part 2), archived gallery

- The most average man in each US state (part 3), archived gallery

- No part 4 for men.

Since all of the “average man” threads only exist now on archive.org, visit those archived galleries to see what there is to see. However, the “average woman” threads were not removed from Reddit, and their archived galleries are incomplete, so here’s a list! If archive.org archives this page and its links, we’ll be in good shape.

Ladies first

These galleries were posted first, so I’ll discuss them first. Many of the images are rather ho-hum, featuring a resident of the state surrounded by their primary food export, what a child might assemble for a school project to create a brochure about their assigned state (yes, this is a thing, mine was Texas, I didn’t draw a Texan surrounded by food, I remember mentioning that it has 100,000 miles of road, that was around the mid-90s, today it’s around 314,000). Some, however, have escaped the mundane, such as Alabama wearing a wedding dress and holding an AR-15-style rifle (although the gas piston protrudes past the muzzle, which is unusual – we’ll get back to that). Arkansas... poor Arkansas. Some just look like an ordinary selfie you might see on social media, such as Colorado, Minnesota, New Jersey, and Utah. Others look like glamorous photo shoot material, like Georgia, Hawaii, and Louisiana. One of the images is Texas. Look at that... gun-thing. Just look at it. The Texan woman can’t even hold it properly because it’s more Escher than Ruger. It’s all handles, tubes, and kibble. MidJourney reached into a bucket, scooped out a hearty handful of Gun, and dipped it in glue like a pinecone Christmas ornament (I can’t find a Wikipedia link for this but it refers to slathering a pinecone in glue, glitter, Santa hats, and sundry whatever-the-hell, then hanging it on your Christmas tree like it’s not a simultaneous affront to art and nature).

Confirmed, guns are the new hands

Look at South Carolina man.

First, let’s acknowledge that his finger is not on the trigger. Trigger discipline is very important, boys and girls. You don’t want the reason you accidentally shoot someone to be “I sneezed.” The more astute of you will point out that the firearm shouldn’t be pointed at anyone in the first place. The most astute of you will point out that that is as true as it is irrelevant, because we follow all firearm safety rules, in the same way that programmers practice “defensive programming” with safeguards on top of safeguards that should in theory be mutually exclusive but are they really, I can’t be sure and I won’t take the chance.

Next, let’s acknowledge that this man’s gun is not a gun. Even Hollywood nonsense like a side-by-side pump-action and video game nonsense like a side-by-side pump-action break-action revolver have some sort of logic to them: they don’t have to function as a weapon, they just have to look like one. That means drawing on well-known tropes for recognizable imagery that even laypeople with no specific domain knowledge can see and immediately categorize as a gun. That’s why bombs are cast-iron spheres with a string sticking out, the icon to save a file is still a floppy disk (even young children know what it is, even though they don’t know what it is beyond “that’s a picture of the idea of saving a file”), the icon to use a phone is still the classic handset silhouette, and so on.

I’m not kidding about the side-by-side pump-action, by the way. The following is from American Dad season 6 episode 19.

The side-by-side pump-action break-action revolver wasn’t a joke, either. Also note how its various components bend, stretch, jiggle, float, and pass through each other as needed.

Please excuse the crudity of this video. I didn’t have time to build it to scale or to paint it.

I take back what I said about hands

Are you ready for the most AI-generated of AI-generated hands? I assure you that you are not. And no, it’s not the wrong number of fingers or knuckles or what have you. This was done with malice aforethought (to the extent that an AI is capable of such, which is not at all, but also simultaneously very significant in this instance). Deliberately. On purpose.

The following is Maine.

GAZE YE UPON IT

CRAB PEOPLE

CRAB PEOPLE

TASTE LIKE CRAB,

TALK LIKE PEOPLE

(Yes, I know it’s lobster, not crab.)

As horrifying as it is, it’s also a fascinating result. If a human photographer wanted to pose a Mainer (yes, they call themselves that, I had a professor who was a Mainiac, yes, he called himself that too) with some lobster, they’d probably just do that. A graphic artist or illustrator would probably also just draw that. The odds seem very low that it would occur to them to turn her hands into lobster parts. And if it did, or if someone suggested it to them, I think they’d briefly consider it and then reject the idea, on the grounds of “why” or “WTF.” But MidJourney went for it, purely because it has noticed that lobster parts tend to occur in that area in that situation. A purely mechanical decision, no flair of surrealism, no desire to shock, no intent at all. But it’s totally unexpected and thought-provoking, just like the best art is supposed to be. Did MidJourney create art? Did the prompt create art? Did simple physical laws create art, like a Foucault pendulum knocking down each pin one by one? But how about the people who understood these physical laws and set up a contrivance to do something? Surely their involvement is meaningful?

Philosophical (and legal) questions aside (for now), how about we cleanse our palate with some nice pictures that show MidJourney doing nice work? Here is MidJourney’s idea of the most average woman from Minnesota.

Splendid! The only thing I can think of that might possibly be a tell that this image was generated by AI is the knit/crochet pattern of the woman’s scarf, but that’s mostly because I am not a knitting/crochet expert and have no idea whether it is or could be a real pattern, or if that’s even possible to determine, particularly from a static image. For all I know, it could be perfectly fine. If I had to guess, I would venture that it probably is. Note that the image has a very strong depth of field effect, with the background heavily blurred. This is not only realistic but also hides potential flaws. The next photo has a much gentler depth of field effect (or possibly motion blur), but even a little bit helps. Here is MidJourney’s idea of the most average man from Michigan.

What a dashing fellow! As stated, the background is clear enough that we can gather some details beyond the existence of buildings and possibly a car as in the Minnesota image. Here we have distinctly recognizable cars, which appear to be straight out of the 1950s. The image as a whole is themed consistently, so it’s not a huge problem, but color photography only became common in the 1970s, so this would be an extremely unlikely image outside of a staged photo shoot or 1950s-retro-themed convention of some sort. But it does look great. The only indication of AI that I could see was in the jacket, which is buttoned up over the necktie but on one side is also opened past the scarf. The AI has seen both of those things very often, but doesn’t yet know that they’re mutually exclusive. Could the man be wearing two suit jackets, or possibly some dastardly machination of sartorial skulduggery with a jacket Frankensteined together out of a non-integral number of jackets and/or other textile sources? I suppose it’s technically possible. Maybe a dedicated cosplayer would be able to create such an outfit for fun (I just realized that recreating AI bloopers in real life is something we need in this world). But, broadly speaking, it is an error, and I wouldn’t be surprised if the average viewer could guess that it was AI without the possibility first being suggested to them.

I still think it’s a good image. But let’s get one more that has no dead giveaways that I can see.

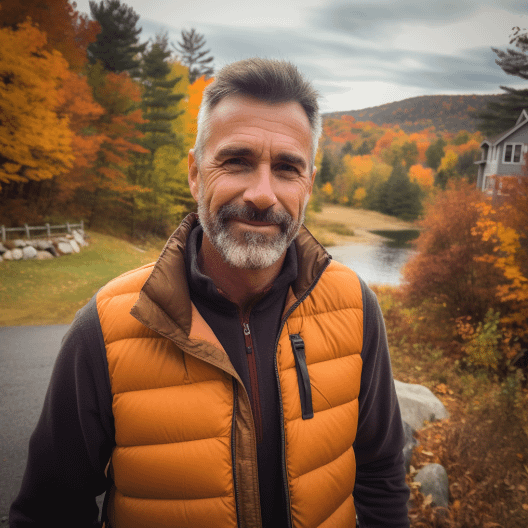

Like Minnesota, New Hampshire Man’s image is a perfectly ordinary extraordinary effort. It could be a dating app profile photo, outdoor apparel ad, or stock photo. Scouring the image for the telltale hand of AI, my best guess is the zipper on the gray jacket, as there’s what looks like a bit of metal near the top and then a black piece of plastic a couple inches lower for no apparent reason, but the image doesn’t have nearly enough resolution for a concrete determination in either direction. Releasing this image without stating up front that it was AI would likely lead to two camps of people on opposite sides of the fence, one claiming that this zipper was impossible in real life and the other claiming that it’s obviously some particular kind of zipper that they’ve seen before and is thus definitely real. The most important conclusion here, however, is that if I needed a picture of a middle-aged guy in New Hampshire on an overcast day after a bit of rain, this would do quite nicely.

MidJourney? More like, MidDestination

It is now time to talk about a couple other cool things MidJourney can do. First, it has its own version of outpainting (adding more content beyond the borders of an image, like zooming out) that only works on images it generated in the first place. It’s a bit like starting to write something on your phone and then just repeatedly tapping the keyboard’s suggested words, but more cohesive.

Second, it essentially served as one of the artists for a dev who used a variety of AI tools to clone Angry Birds, a game with a $140,000 budget, for next to nothing. Even $140,000 is nowhere near the budget for a modern AAA game, which can run into the hundreds of millions of dollars, but it still represents a large capital investment for an independent developer. Removing that barrier to entry for games of this scope is of inestimable value, not only to entrepreneurs but to the public. If the original developers of Angry Birds hadn’t been able to secure the requisite funding, the game never would’ve seen the light of day... unless and until they gained access to these modern AI tools. What games (and other creations) will we see now that AI can obviate the need for a six-figure investment?

And more

It won’t magically make all Python programs faster, but a new AI code profiler for Python should make it easier to find problematic areas in a program so developers have an idea of where to focus their efforts. This camera with no lens – thus, I suppose you could say, not actually a camera at all, but who’s counting – takes a “picture” by looking up the location indicated by its GPS coordinates and generating an AI image based on whatever information the model has about that place. Where a real camera would take an actual picture of a place and then slap a geotag on the EXIF metadata, this camera starts from the geotag and then scoffs, “why take an actual picture if I already know what this place looks like?” Naturally, this only works with well-known locations that are photographed often, but most places and subjects that people photograph will fall into that. Interestingly, this is kind of not the first time this sort of thing has been implemented, except without GPS data, and without generative AI, so I suppose it’s completely different, but when I tell you what it is you’ll realize that it really is the same, trust me. For several years now, some phone cameras have either had a “Moon mode,” or simply been able to recognize that they’re pointed at the moon, and will flat-out make you not a photographer anymore. The Moon is very old, very famous, and very visible. There are many photos of it, to put the matter lightly. In a nutshell, the camera will detect that you’re photographing the moon and replace the photo it can take on its own with a good photo taken by someone with much better equipment. I mean, you wanted a picture of the Moon, right? And now you have one. And it’s really good. “No,” you say, “I wanted to take a picture of the Moon.” But you did! And now you have a very nice picture of the Moon! “I wanted to see the picture I actually took, though.” Well, that’s... I... you... hmmm. Your camera is now confused. You have confused your camera! 
You are a bad camera parent. Or, as the Germans might say with a brand-new compound word because that’s how they roll, a schlechter Fotoapparatpfleger, literally “bad photo apparatus caretaker.”

The Moon is surely a special case. There are few things that everyone on Earth can see, and I’m talking about the literal unique object, not similar objects or manufactured duplicates. This isn’t a person, a dog, a tree, or even a mass-produced item like a car or a piece of electronics. When you look up into the sky and see the Moon, it’s the same one that everyone else sees. As the logic goes, why not delegate the visual documentation of that object to people who are actually good at it, perhaps even dedicated to it as a career? Well... people like feeling unique, like they’ve done something unique, seen something unique. Even if we’re joining a long line of people who’ve done the exact same thing in a proud tradition of doing that exact same thing, we feel pride in the participation, in adding our own uniqueness to the sum total of that tradition. Whether that’s smithing a horseshoe, photographing the Moon, or declaring on a dating app profile that we like The Office, we are special. Just like everyone else. So when we whip out our tiny camera optimized for well-lit photos of people doing stuff, point it at a tiny sliver of the night sky, and hit the shutter, we expect it to be our crummy photo. Our black rectangle with a white dot in the middle.

Hey now, I took that back in 2007, with a real camera, mounted on a tripod. That’s my horseshoe. If we wanted a good photo of the Moon, we could just look it up online, but we didn’t do that, now, did we? No. We wanted to capture our experience, with an experience-capturing device. Erfahrungfangengerät. Thanks again for the word, German! German is such a bro, making us look totally legit even with brand-new words. Back to AI, though, the question is whether we want to create new content or review old content, and the new and more disconcerting question is where AI fits into this with its ingestion of as much old content as possible and subsequent reassemblage of said content into content that is sort of new but also sort of not. Yes, you read that right, I just said that AI content is sort of not original content, even after gushing about lobster hands and Florida. Oh, did I forget to mention Florida? This is MidJourney’s idea of the average Florida woman.

I love it. I just love it. MidJourney became a caricature artist. Florida? Gator in a dress. I love it. It could’ve been an ordinary woman surrounded by oranges, like it did with Georgia and peaches, it could’ve been an overworked-looking woman next to a gator or wrangling a gator or whatever (the average Montana man is riding a bear (and armed with a long-barreled lever-action revolver)), but instead we got this. It’s great. 10/10.

Ouroboros

But it is a fact that this is not quite new content, not quite original material, in at least one very important way. When AI models are unknowingly given AI-generated content to ingest as input, they degrade (note that properly-supervised synthetic input does not have this hazard). Like a royal family producing more children with more and worse congenital birth defects with every generation of inbreeding due to the fundamental mechanics of DNA that we can’t even see without an electron microscope, there is something similarly fundamental to how AI operates and similarly difficult to clearly see and understand that makes AI output bad AI input. This phenomenon is called “model collapse.” Essentially, AI content makes the occasional mistake (unrealistic output, like hands with far too many fingers or made of lobster parts) due to its lack of understanding, and it also tends to focus on producing unremarkable content most of the time. Strangely, these seem contradictory – a woman holding a lobster with hands made of lobster is a very remarkable mistake – but the devil is in the details. People produce plain content or weird content on purpose. AI doesn’t know the difference. It just knows what’s usual or unusual, according to the mountains of examples it’s been fed. And, by and large, it goes for usual, because that’s what people usually want out of their prompts. Trying to “act natural” and failing at it in its own special way is exactly what doesn’t help train an AI model. As the linked article suggests, unusual events occur with low frequency, not zero frequency, but AI models tend to forget that. If you show an AI model a thousand pictures of wealthy corporate boardroom meetings and then ask it to generate one, what do you think are the chances that it’ll produce an image with any women or minorities? 
Those would be rare in the input data, but they can easily be even less likely to appear in the generated output, unless you modify your prompt to be more specific than just “corporate boardroom.”
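The “low frequency, not zero frequency” point can be illustrated with a toy simulation. To be clear about the assumptions: this is not how any real model is trained, and the names `next_generation` and `simulate` are mine. The “model” here merely memorizes the mix of labels in its training data and generates a new dataset of the same size by sampling from it, and each generation then trains on the previous generation’s output.

```python
import random
from collections import Counter


def next_generation(data, rng):
    """'Train' on data, then generate a same-size dataset by sampling from it."""
    return [rng.choice(data) for _ in data]


def simulate(generations=30, size=200, seed=0):
    """Track how many 'unusual' items survive each generation."""
    rng = random.Random(seed)
    # Generation 0 stands in for human-made data: 95% usual, 5% unusual.
    data = ["usual"] * (size * 95 // 100) + ["unusual"] * (size * 5 // 100)
    history = [Counter(data)["unusual"]]
    for _ in range(generations):
        data = next_generation(data, rng)
        history.append(Counter(data)["unusual"])
    return history


history = simulate()
print(history)
```

The count of unusual items wanders at random from one generation to the next, and nothing pulls it back toward the original 5%. If it ever reaches zero, it stays there, because a model that has never seen an unusual item has nothing to sample one from.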

Stack Overflow’s AI worries

One corporate boardroom that’s been struggling with AI is that of Stack Overflow, or more accurately, Stack Exchange, Inc., which runs the Stack Exchange network of sites, which includes Stack Overflow. SO (the company) laid off 28% of its staff a few weeks ago, a familiar story to anyone who’s been following the tech sector. It seems like every tech company has had layoffs this year, from Amazon to Zoom. When COVID hit, the Internet – already a pretty big thing – suddenly became an even bigger thing, as every instance of not using the Internet – e.g., working together with coworkers in an office – was hurriedly converted into an instance of using the Internet, if... ahem... remotely possible. Seeing all their services suddenly become several times more popular, many large tech companies massively overhired.

Seeing all their services suddenly become significantly less popular after vaccines were developed and people were no longer penned up in our homes, many large tech companies had massive layoffs. Somehow, COVID managed to disrupt the business world both coming and going, with service sector bankruptcies when people couldn’t go to restaurants or yoga classes or any other non-essential outings, and tech layoffs when people no longer needed as much online connectivity as we did when we were unable to go to restaurants or yoga classes or any other non-essential outings. Hell, COVID was even blamed for completely unrelated problems. Fry’s Electronics, an electronics retail chain in the West Coast’s Bay Area, closed abruptly in early 2021, around the height of COVID. Leadership blamed “changes in the retail industry and the challenges posed by the Covid-19 pandemic.” No, suits, this one was all on you. The retail industry didn’t change around you, and COVID had nothing to do with people not going to Fry’s. The real reason was something called a “consignment purchase.” The usual way that retail stores operate is by buying merchandise from manufacturers at wholesale prices and then selling those products to the public with a markup. What Fry’s wanted to do, however, was buy that merchandise on consignment, meaning, they expected manufacturers to just give them a bunch of free stuff, and then Fry’s would pay them back if the products sold. Unsurprisingly, manufacturers weren’t that stupid, and Fry’s locations became ghost towns, with aisle after aisle of empty shelves. They still had lots of employees, hanging around and doing nothing, but they weren’t willing to actually shell out for things that people might want to buy. The last few times I went to Fry’s, thinking I’d buy a small electronic widget without waiting for shipping from a cheaper online store, I ended up not buying anything. Because they didn’t have anything to buy. 
Small wonder that this business model didn’t survive. But hey, it wasn’t management’s fault! It was the industry. And COVID. Any scapegoat in a storm.

Baa-a-a

That’s the bleating scapegoat, get it? Anyway, SO needed a scapegoat for the layoffs, and COVID apparently would not suffice, so how about AI? Blaming AI, and of course also making their own AI, will form the flagstones of a “path to profitability,” according to company management. The small fact that the company “doubled its headcount” just last year, and the possibility that maybe that wasn’t sustainable after all, were non-factors. Apparently. It had to be someone else’s fault.

To be fair, text-generating AI tools like ChatGPT are indeed quite disruptive to a business model of asking questions in text form and then receiving answers in kind. First, there’s the disruption of opening a firehose of auto-generated garbage masquerading as the work of a human expert in a particular field. As we know, AI just puts things together in familiar patterns. It doesn’t know that certain patterns mean certain things, or that 2/3 is not the same as 3/2 even though they look rather similar and have a lot more in common with each other than with, say, a picture of a traffic light. Similar though they look, they are not equivalent, and not interchangeable if we’re trying to simplify 4/6 rather than merely write something that looks like it’s probably a fraction.
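For anyone who would rather see the difference than take my word for it, here’s a minimal sketch using Python’s standard fractions module. The variable names are mine, purely for illustration; the only point is that actual arithmetic tells 2/3 and 3/2 apart, whereas matching on appearance does not.

```python
from fractions import Fraction

# Fraction reduces to lowest terms on construction, so this is "simplify 4/6".
simplified = Fraction(4, 6)

# The correct answer, and the lookalike with the same digits swapped.
right = Fraction(2, 3)
lookalike = Fraction(3, 2)

print(simplified)               # 2/3
print(simplified == right)      # True
print(simplified == lookalike)  # False
```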

For amusement purposes only

If you use AI to create a book cover for a romance novel that will sit with a hundred others on a shelf in a grocery store and the hunk’s abs are a seven-pack instead of a six- or eight-pack, it’s not the end of the world. It’s a curiosity, nothing more. If you ask generative AI how to preserve ICC color profile metadata when using FFmpeg to convert a PNG image to lossless WebP, it may say that you need the -metadata option. Sounds reasonable, right? But it’s wrong. That option is for adding metadata to a file, such as tagging an audio file with an artist or genre. It is not for preserving ICC color profile metadata. This was my first personal test of using the power of AI search to locate a specific answer to a difficult question, as I’d not been able to find it with regular searching or even by asking on Reddit. AI, as it turns out to no one’s surprise, is not great at this task, being better suited to queries like “show me some recipes for pumpkin pie” or “where can I find stylish baby clothes?”. Of course, that first one is also probably not a good thing to ask AI, unless you make sure it’s backed up with a source from a real human sharing a real recipe that will actually work rather than telling you to add sugar three different times while never actually specifying how much pumpkin you need.

Programming is like that, but even more susceptible to errors that may be as small as a single character. Some users on SO were using AI to generate tons of answers that looked very convincing to a cursory glance but were actually wrong, because talking like a programmer is very substantively different from correctly answering a specific programming question. SO moderators protested against this firehose, implementing a policy rejecting such content and banning users who were found to be engaging in its dissemination. Site admins overturned this policy, valuing site traffic over site accuracy. In a way, it’s understandable, as the site has historically struggled with revenue. “How is that possible?” you ask. After all, SO, like most Web 2.0 platforms, is indeed little more than a platform. It doesn’t have to lift a finger to put anything onto the platform, relying on users to populate the website with content. Of course, the platform is, in turn, offered for free to users, supported by ads. So if people don’t keep visiting and viewing those ads, the site loses revenue, and it does take revenue to maintain a website, even just a platform with free content.

First, it is of utmost importance to note that SO was built upon the promise of providing a repository of knowledge that will remain freely available to the programming community that donated its time and expertise to create such a repository. Sure, they can retroactively change the licensing terms of contributed content on a whim with no input from the site’s users, which means that presumably they could do so again and this time switch to a restrictive license that basically says “lol thanks for all the content it’s all ours now,” but, um... but nothing; that’s a real problem. It’s not purely hypothetical, either, as the current AI environment makes a licensing change look like not merely a possibility but a possible solution to an active threat to the platform.

You see, AI tools have scraped the content of the Stack Exchange network. The freely available content, which can be easily downloaded as a rather large archive from a variety of sources. Most sites that scrape it do little more than clone the site in hopes of siphoning off some traffic so they can get revenue from their own ads, but clones won’t have the latest content, or the best interface, and so on. It’s better to use SO proper.

Until AI.

It just runs programs

A human expert browsing SO for good questions to answer is an expert, but they are also a human. They have understanding. They have expectations. They expect people to do some research before asking a question. They understand when someone is outsourcing their computer science course to strangers, one question at a time. They might not do exactly what a question-asker wants. AI, on the other hand, will do its best to answer a question (as long as it hasn’t been messed with via a confusing conversation or deliberate instructions to be unhelpful). You can be the laziest jerk in the world with the stupidest question in the world, and AI will treat your query with the same gravity and aplomb as any other question you could possibly ask. This has made it rather popular as an alternative to SO. Instead of searching SO, possibly not finding a suitable Q&A, asking a question themselves, and being told that the answer is in another castle (you wouldn’t believe how many people will lose their godforsaken minds at the mere suggestion that a student might not be a pioneer in the field and their question has been asked before), people just ask AI and get a clear, direct answer.

Too bad it’s probably wrong. Complete, thorough, and addressing every question in a polite and even tone, but probably wrong.

And too bad they don’t even care that it’s wrong. They place greater value on being coddled and sent on their way with garbage than on being called out on their mistakes but given a correct answer. This is incredibly surprising, for the fraction of a second before you remember that this is people we’re talking about. Of course a soothed ego is more important than getting actual programming help. AI excels at the former and SO does not, so the latter no longer matters. SO is losing traffic and revenue to a stupid robot that doesn’t solve your problem but pretends to very politely.

What is SO doing about this? Well, the first option is asking to be paid. Paid for those data that they promised would be freely available to serve the programming community. Funny how they were so generous with the content until someone outshone them and started hurting their revenue, and now suddenly it’s MY Binky the Clown cup! MINE! MINE! MINE! MINE! There’s probably a name for a gift that the giver doesn’t expect the recipient to ever use, and was only ever given for virtue-signaling. According to the linked article, former GitHub CEO Nat Friedman estimates each quality answer to a quality question to be worth $250 to tech companies using that content to train an AI. Well, he didn’t exactly say that’s what this resource is worth, he just meant that tech companies could afford such a price tag for training data, given the expected revenue from offering an AI thus trained.

It’s my money and I want it now!

TWO HUNDRED AND FIFTY DOLLARS? I currently have 1,629 answers on SO alone. Let’s not merely round that off, but truncate it to 1,000. This man thinks my freely-shared expertise was worth a quarter of a million dollars? Let’s back up the truck. How long does a decent answer take? I’d estimate five minutes to an hour, mostly. No one but the very top senior developer executive types is making that kind of money. Junior devs, hired for as little as possible but somehow with the expectation of possessing years of experience in a variety of skills (it’s always amusing to see job postings demanding more years of experience in a framework than the framework has existed), can’t even dream of that. No, I think it’s safe to say that this $250 figure was pulled out of the air and confirmed as reasonable by doing the math and determining that someone has that much money and could possibly pay it. Should pay it! Right? Well, not to content creators. SO wants money to flow from AI companies to SO and then stop there; the most they’ll do is invest in providing more exciting non-monetary awards.
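To put numbers on “that kind of money,” here’s a rough back-of-the-envelope sketch. The figures are the ones above ($250 per answer, 1,000 answers, five minutes to an hour each). The function name is made up for illustration, and the whole thing assumes every answer really is worth the full $250, which is exactly the assumption in question.

```python
# Figures from the text: $250 per answer, 1,000 answers (truncated from 1,629),
# and an estimated five minutes to an hour of work per answer.
VALUE_PER_ANSWER = 250
ANSWER_COUNT = 1_000
FASTEST_MINUTES = 5
SLOWEST_MINUTES = 60


def implied_hourly_rate(value_per_answer: float, minutes_per_answer: float) -> float:
    """Dollars per hour if a single answer takes the given number of minutes."""
    return value_per_answer * 60 / minutes_per_answer


total_value = VALUE_PER_ANSWER * ANSWER_COUNT
low_rate = implied_hourly_rate(VALUE_PER_ANSWER, SLOWEST_MINUTES)
high_rate = implied_hourly_rate(VALUE_PER_ANSWER, FASTEST_MINUTES)

print(f"Total: ${total_value:,}")                        # Total: $250,000
print(f"Hourly: ${low_rate:,.0f} to ${high_rate:,.0f}")  # Hourly: $250 to $3,000
```

Even the low end, stretched over a hypothetical 2,000-hour working year, would come to $500,000.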

Another option is for SO to make an AI out of its own data, guaranteeing that ad revenue goes into the correct pockets.

But what happens to SO, the Q&A site, itself after all is said and done? What if everyone migrates to AI? Well, as we’ve discussed, AI is not alive, and it’s never the first to say something new (more or less). If a question hasn’t already been asked and answered on SO and then used to train an AI, that AI won’t come up with that on its own. It can guess, of course, but the further the training material is from the topic at hand, the less likely it is to be useful. Remember, this isn’t a romance novel cover, it’s math and science and a whole bunch of other things that are supposed to have right and wrong answers. If anything, people asking their questions to an AI rather than posting new questions to SO should make SO tidier, with less dross. Old content remains, new content is automatically curated down to things that are actually new content, AI ingests the new content as needed, everybody wins, right? No. SO’s revenue would plummet and the site would disappear. AI models would gradually become outdated, either becoming frozen and not knowing about anything past a certain date or attempting to cannibalize their own “answers” back into themselves, each one a losing proposition. SO is the tree branch holding up AI as it harvests the fruit of human labor, and AI needs to avoid sawing off that branch and making a horribly AI-shaped crater in the ground far below. While the tree shrivels up and dies. And then bursts into flames. And explodes.

Kaboom

Speaking of exploding, know what could also be bad for AI? Legal issues! As one ought to expect with anything new, the legal landscape is totally unprepared for it, because laws are made reactively rather than proactively. With very few exceptions, laws are written to solve extant problems, like bug fixes for society, rather than address the much larger set of hypothetical problems that might occur in some branch of reality. AI appeared so suddenly and raised so many new questions that society must first form an opinion about it all. The biggest issue so far seems to be that of copyright – on both ends. On one end is the concern of the copyright status of an AI’s training data, and on the other end, that of a generative AI’s output. To no one’s surprise, people and organizations that create content which gets used to train AI are by and large firmly of the opinion that this process is theft, and they want to be paid for any work that’s used in a training dataset. Author and comedienne Sarah Silverman is at the vanguard of content creators suing OpenAI and Meta for copyright infringement, alleging that the various AIs’ training datasets included online collections of pirated ebooks. Virtually every major AI with an enormous dataset is or can be expected to come under fire from similar claims. After considering a lawsuit against OpenAI for several months, the New York Times finally abandoned negotiations and filed the lawsuit. Songs generated using existing artists’ voices have already begun to pop up and attract lawsuits. Getty Images is suing Stability AI, makers of image generator Stable Diffusion, for possibly training the latter’s AI on the former’s images. They don’t have a problem with AI per se, they just want to keep their piece of the pie, as they’re also producing their own AI trained on their own images. 
They promise they’ll compensate the people who contribute actual images, with the specifics doubtless entirely dictated by the company and handed down in a dense licensing agreement update. GitHub is being sued over the claim that its Copilot tool not only stores and copies code but also knows this and deliberately varies its output, like the “can I copy your homework?” meme, but this time it’s not just a mockbuster or a cloned game, and lots of people are very angry.

Clear as mud